Support static dimensions (aka IndexList) in resizing/reshape/broadcast.

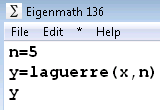

# Eigenmath 1.3 update

- Update the padding computation for PADDING_SAME to be consistent with TensorFlow.
- Improve randomness of the tensor random generator.
- More numerically stable tree reduction.
- Modify tensor argmin/argmax to always return first occurrence.
- `device(thread_pool_device, std::move(done)) = in1 + in2 * 3.14f`
- `makeCompressed()`: the recommendation is to compress the input before calling sparse solvers.
- SVD implementations now have an `info()` method for checking convergence.
- All dense linear solvers (i.e., Cholesky, *LU, *QR, CompleteOrthogonalDecomposition, *SVD) now inherit SolverBase and thus support …
- New elementwise functions for absolute_difference, rint.
- Improved special function support (Bessel and gamma functions, ndtri, erfc, inverse hyperbolic functions and more).
- Speedups from (new or improved) vectorized versions of pow, log, sin, cos, arg, log2, complex sqrt, erf, expm1, log1p, logistic, rint, gamma and Bessel functions, and more.
- New faithfully rounded algorithm for pow(x,y).
- New implementation of the Payne-Hanek argument reduction algorithm for sin and cos with huge arguments.
- Fixes for corner cases, NaN/Inf inputs and singular points of many functions.
- Many improvements to correctness, accuracy, and compatibility with the C++ standard library.
- Many functions are now implemented and vectorized in generic (backend-agnostic) form.
- Improved or added vectorization of partial or slice reductions along the outer dimension, for instance: `colmajor_mat.rowwise().mean()`.
- Faster specialized SIMD kernels for small fixed-size inverse, LU decomposition, and determinant.
- … operations by propagating compile-time sizes (col/row-wise reverse, PartialPivLU, and others).
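To make the PADDING_SAME item above concrete, here is a minimal sketch of the SAME padding convention as TensorFlow documents it: the output size is `ceil(input / stride)`, and any odd padding element goes on the trailing side. This is not Eigen's actual code; the names `Padding`, `same_output_size`, and `same_padding` are hypothetical helpers introduced only for illustration, and the sketch covers a single spatial dimension.

```cpp
#include <algorithm>
#include <cassert>

// Hypothetical helper type: padding added before and after one dimension.
struct Padding {
  int before;
  int after;
};

// Output length under SAME padding: ceil(input / stride).
inline int same_output_size(int input, int stride) {
  assert(input > 0 && stride > 0);
  return (input + stride - 1) / stride;
}

// Total padding needed so that `output` windows of size `kernel` fit,
// split so that the extra element (if any) lands on the trailing side.
inline Padding same_padding(int input, int kernel, int stride) {
  assert(input > 0 && kernel > 0 && stride > 0);
  const int output = same_output_size(input, stride);
  const int total = std::max((output - 1) * stride + kernel - input, 0);
  const int before = total / 2;
  return Padding{before, total - before};
}
```

For example, an input of length 4 with a kernel of 3 and a stride of 2 needs one padding element in total, and under this convention it is placed after the data rather than before it.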

Various performance improvements for products and Eigen's GEBP and GEMV kernels have been implemented:

- By using half- and quarter-packets, the performance of matrix multiplications of small to medium-sized matrices has been improved.
- Eigen's GEMM now falls back to GEMV if it detects that a matrix is a run-time vector.
- The performance of matrix products using Arm Neon has been drastically improved (up to 20%).
- Performance of many special cases of matrix products has been improved.
- Large speed up from blocked algorithm for …

Eigen now uses the C++11 `alignas` keyword for static alignment. Users targeting C++17 only and recent compilers (e.g., GCC>=7, clang>=5, MSVC>=19.12) will thus be able to completely forget about all issues related to static alignment, including `EIGEN_MAKE_ALIGNED_OPERATOR_NEW`.
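The static-alignment note above can be illustrated without Eigen itself. The sketch below uses plain `alignas` on a hypothetical 32-byte packet type and embeds it in a hypothetical user struct; `Packet8f`, `Particle`, `is_aligned`, and `heap_particle_is_aligned` are illustrative names, not Eigen types or functions. It assumes a C++17 compiler for the heap case, since C++17 is where `new` began honoring over-aligned types automatically.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <memory>

// Hypothetical stand-in for a fixed-size vectorizable type: 8 floats,
// statically aligned to 32 bytes via the C++11 alignas keyword.
struct alignas(32) Packet8f {
  float v[8];
};
static_assert(alignof(Packet8f) == 32, "alignas sets the type's alignment");

// Hypothetical user type holding an over-aligned member. The struct
// inherits the strictest alignment among its members.
struct Particle {
  Packet8f position;
  int id;
};

// True if pointer p is a multiple of `alignment` bytes.
inline bool is_aligned(const void* p, std::size_t alignment) {
  return reinterpret_cast<std::uintptr_t>(p) % alignment == 0;
}

// With C++17, plain new/make_unique selects the aligned allocation
// function for over-aligned types, so no custom operator new is needed.
// Before C++17 this guarantee does not hold.
inline bool heap_particle_is_aligned() {
  auto p = std::make_unique<Particle>();
  return is_aligned(&p->position, alignof(Packet8f));
}
```

The design point is that the alignment requirement travels with the type: objects on the stack always satisfy it, and under C++17 heap allocations do too, which is why a macro that overrides `operator new` per class becomes unnecessary.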
